Engineering a Fiduciary: Expanding the Regulatory Scope of Algorithmic Bias

By Bao Kham Chau

Bao Kham Chau is a visiting fellow at CornellTech, an affiliate at the Harvard Berkman Klein Center for Internet & Society, and an intellectual property litigation associate at Fish & Richardson, P.C. The author thanks John Setear, Danielle Citron, Jonathan Zittrain, James Grimmelmann, Jordi Weinstock, Yong Jin Park, Tori Ekstrand, Jasmine McNealy, Chang Cai, Bich Van Chau, Hien Thi Nguyen, participants in the Media Law and Policy Scholars Conference, the CornellTech DLI Seminar, and the University of Michigan’s Algorithmic Reparation Workshop, and the Harvard Journal of Law & Technology editorial staff for their comments.

Introduction

Online platforms use algorithms to mediate information by curating data and determining whether they are relevant to their user base. While these mathematical models are useful in filtering the cacophony of online noise, they also impose an interpretative layer that reinforces and amplifies existing patterns of power and privilege.[1] If left unregulated, this algorithmic Trojan horse could have grave implications for societal stability in the United States and abroad.[2] Confronted with the offline implications of online speech, American scholars and politicians of all stripes have called for an overhaul of the regulatory frameworks governing the algorithms of BigTech companies.[3] Because these proposals primarily focus on machine learning (“ML”) algorithms like ChatGPT’s deep learning algorithms, however, they often fail to consider the impact of rule-based, non-ML computer code. Indeed, algorithmic bias in the software “scaffolding” (the non-ML code responsible for collecting and supplying data to an ML model) could contaminate the entire system and produce discriminatory results even if the input data and ML models were fair and equitable.

To improve our understanding of the myth of algorithmic neutrality, which assumes that algorithms, unlike biased human decision-makers, are fair, equitable, and objective, this Commentary recommends expanding the scope of algorithmic regulation to include both rule-based and ML algorithms. Broadening the regulatory scope would also bring a hitherto unexamined aspect of software engineering into the algorithmic justice movement. Specifically, this expansion would allow scholars to interrogate the Agile Software Development process and the resulting computer applications to show where ostensibly technical decisions could encode various biases of Silicon Valley software engineering teams.

Because many of the digital tools currently in use contain tens of millions of lines of code, it is prohibitively expensive, if not impossible, to audit every codebase and ensure that it does not perpetuate and amplify structural inequalities. Consequently, existing external regulations, such as the Americans with Disabilities Act, might not be enough to address the injuries caused by these algorithmic governors.[4] Instead of relying only on these regulations, it is therefore necessary to promulgate and enforce a code of ethics for the software engineering profession.

This Commentary begins by discussing the shortcomings of current algorithmic regulatory frameworks and recommending the expansion of the scope of algorithmic oversight to include non-ML code. It suggests that this expansion would allow for a more thorough examination of software development techniques, which could uncover normative biases that contaminate the purportedly neutral process and produce discriminatory results. The Commentary then analyzes one instance where a top-down, technocratic framework that was designed to address algorithmic bias fell short. Finally, it concludes with a proposal to complement top-down algorithmic governance proposals with the creation of a bottom-up ethical software engineering framework.

The Scope of Algorithmic Oversight

The current regulatory frameworks are not well suited to deal with both rule-based and ML algorithmic bias because they are designed for the latter type of algorithm. The 1990 Americans with Disabilities Act (“ADA”), for example, prohibits disability-based discrimination by employers and ensures full access to public and private facilities and services for people with disabilities.[5] Despite many allegations of ADA violations in cyberspace, however, the United States Supreme Court has not yet provided a definitive ruling on the applicability of the ADA to digital accommodations. In a 2021 case, the Supreme Court declined to address the issue while noting that many jurisdictions have recognized that ADA accommodations do apply to software and virtual spaces.[6]

In the absence of clear guidance from the judiciary, executive agencies like the Equal Employment Opportunity Commission (“EEOC”) have issued guidelines to ensure compliance with the ADA in algorithmic decision-making systems.[7] These guidelines, however, mostly focus on a particular subset of algorithms that use ML to make employment decisions (e.g., using a chatbot to filter out users with disability-related employment gaps).[8] Ordinary digital tools and rule-based algorithms remain an afterthought. This regulatory gap presents significant challenges to providing digital accessibility. The American Foundation for the Blind, for example, found that “48% reported accessibility challenges with electronic onboarding forms” and that 50.2% reported that their employer adopted new hardware or software that was not accessible.[9]

To address this regulatory blind spot, it is necessary to incorporate non-ML algorithms into the discussion on algorithmic bias. An expansive definition of algorithmic bias would broaden the scope of inquiry to cover the entire software development process, allowing for a critical examination of the myth of an objective, scientific software development process, which currently pervades the software engineering profession.
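To make the concern concrete, the following is a minimal, hypothetical Python sketch of how rule-based scaffolding could skew outcomes before any ML model is consulted. The `Applicant` record, the `MAX_GAP_MONTHS` threshold, and the `score_applicant` stand-in are all illustrative assumptions and are not drawn from any real hiring system.

```python
from dataclasses import dataclass

# Hypothetical threshold chosen by a product team; not from any real system.
MAX_GAP_MONTHS = 6


@dataclass(frozen=True)
class Applicant:
    name: str
    years_experience: int
    employment_gap_months: int  # may reflect medical leave, caregiving, etc.


def prefilter(applicants):
    """Rule-based scaffolding: drop applicants before any model sees them."""
    return [a for a in applicants if a.employment_gap_months <= MAX_GAP_MONTHS]


def score_applicant(applicant):
    """Stand-in for a downstream model that considers only experience."""
    return applicant.years_experience


def rank(applicants):
    """Full pipeline: scaffolding filter first, then scoring."""
    eligible = prefilter(applicants)
    return sorted(eligible, key=score_applicant, reverse=True)


applicants = [
    Applicant("A", years_experience=4, employment_gap_months=0),
    Applicant("B", years_experience=9, employment_gap_months=14),
    Applicant("C", years_experience=6, employment_gap_months=2),
]

ranked_names = [a.name for a in rank(applicants)]
print(ranked_names)
```

In this sketch, applicant B has the most experience but never reaches the scoring step, because the non-ML filter has already excluded any record with a long employment gap. An audit limited to the scoring model and its training data would not detect this exclusion.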

Algorithms of Agile Software Development

The Agile Software Development (“ASD”) framework is a cornerstone of this idea of a “scientific” software development process. It outlines a set of iterative practices based on the purportedly user-centric values and principles of the Agile Manifesto.[10] To be considered “agile,” organizations must prioritize requirements-gathering at every stage of the software development lifecycle instead of only doing so at the beginning of a project.[11] This emphasis on requirements-gathering necessitates an incremental approach to software development in which an unfinished product is periodically delivered to end users, who provide feedback that can be incorporated into the next iteration of the product. The theory behind ASD is simple: by failing early and often, software engineering teams can quickly correct mistaken assumptions before they become too expensive to fix.

As ASD became the dominant software development paradigm, computer scientists began studying how companies implement ASD to suggest improvements to the process.[12] Most published literature on ASD, however, does not focus on the non-technical constraints (e.g., employee demographic homogeneity) that could compromise the operationalization of these frameworks.[13] Even those studies that do examine non-functional requirements in ASD do not address the implicit bias of the people gathering the requirements that could contaminate the entire process and produce unwanted programming results.[14] This is because non-functional requirements still focus on various system qualities (for example, performance, scalability, security, usability, and maintainability) that are related to technical requirements. Therefore, in promoting the myth of a self-correcting framework, ASD has shifted contemporary discourse around algorithms away from the software scaffolding integrating with the ML models and from the non-technical stakeholders contributing to the design of such scaffolding.[15]

Such a discursive move casts a shadow on our understanding of algorithmic bias, especially when non-technical stakeholders such as program and product managers are instrumental in shaping a product’s requirements.[16] Indeed, employee demographic uniformity in technology companies could introduce biases such as out-group homogeneity bias to the requirements gathering process, resulting in a discriminatory product specification.[17] Software engineers’ reliance on this flawed product specification would, at the very least, make the eventual code less effective for users not belonging to the in-group.

Biases in Google’s Agile Software Development Practices

The lack of diversity in the workforce of BigTech companies has had negative consequences on their software development processes, resulting in discriminatory software. For instance, a lack of demographic diversity at Google has led to shoddy development of accessibility products. Although the company’s workforce has diversified in the past five years, in 2023 it was still predominantly staffed with “White+” (46.2%) and “Asian+” (44.8%) employees. This contrasts with the relatively small shares of “Black+” (5.6%), “Hispanic/Latinx+” (7.3%), and “Native American+” (0.8%) employees. In the same timeframe, 66.1% of Google employees were male and only 33.9% were female. Finally, in 2023, only 6.5% of Google employees self-identified as having a disability and only 7.0% self-identified as LGBTQ+ and/or Trans.[18]

This lack of demographic diversity has adversely impacted Google’s ASD process, particularly in the Chrome browser open-source codebase. The requirements documentation in Chrome’s accessibility page, for example, shows a biased focus on technical implementations over accessibility.[19] The overview document explaining Chrome Accessibility on Android neglects to mention how to build features to accommodate users with disabilities.[20] Similarly, the requirements document for an accessibility feature called “Reader Mode”[21] does not discuss how a disabled user would use such a feature. Instead, the section on “Deciding whether to offer Reader Mode” merely discusses if a page is compatible with the feature.[22]

The paucity of actual requirements documentation detailing the needs of the disabled leads to several accessibility bugs within the Chromium software. Without clear guidelines on what a disabled person requires, software engineering teams make incorrect assumptions about the user’s behavior and produce features based on those faulty assumptions. One Android accessibility bug, for example, crashed the entire Chrome application because the software engineering team did not anticipate that the accessibility feature would be used on Android.[23] When engineers tried to fix this bug as part of the maintenance phase of ASD, demographic homogeneity once again reared its ugly head. The developers’ comments on the bug showed that they did not even consider that Android users might need this accessibility feature:

Comment 6 … I don’t think recording events [feature] was ever setup on Android … we should probably do a quickfix by removing this button on Android in the interim

Comment 7 … That's right—recording events was never set up on Android … it looks like we are making some assumptions that aren’t true on Android.[24]

These comments also reveal another drawback resulting from a homogeneous workforce. Instead of seeking to understand why a disabled person would want to use this feature on Android, the programmers’ first instinct in this example was to remove the feature and punt on fixing the actual problem. As of the date of this Commentary, this bug has not been resolved.

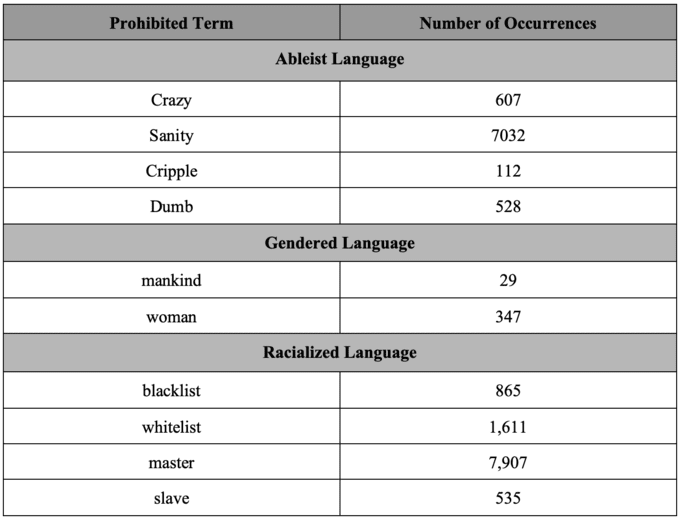

Despite recent efforts by Google and other tech companies to address this problem by issuing several coding guides, this top-down approach is not fully effective. In fact, despite the existence of the “Google developer documentation style guide” and its prohibition of ableist language, the Chrome codebase contains over 7,000 instances of a single violation of this prohibition.[25] Even though the guide recommends against using terms related to the word “insane” (e.g., “Not recommended – Before launch, give everything a final sanity-check”),[26] the Chrome browser code has at least 7,032 instances of the term “sanity.”[27]

Similar searches for other prohibited terms in Chrome fare no better. As seen above, Google’s own product has failed to adhere to the company’s guidelines for writing inclusive code. This implies that a top-down regulatory approach, no matter how well meaning, would still leave a regulatory gap.[28]
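A term search of this kind can also be run against a local copy of a codebase. The following is a minimal Python sketch of such an audit, offered as an illustration rather than as the method used to obtain the figures above. The function name `count_terms`, the one-item term list, and the file suffixes are assumptions made for this example; an actual audit would take its term list from the style guide being enforced.

```python
import re
from collections import Counter
from pathlib import Path

# Illustrative term list; a real audit would use the governing style guide's list.
DISCOURAGED_TERMS = ("sanity",)


def count_terms(root, terms=DISCOURAGED_TERMS, suffixes=(".cc", ".h", ".py", ".md")):
    """Count case-insensitive occurrences of each term in files under root."""
    patterns = {t: re.compile(re.escape(t), re.IGNORECASE) for t in terms}
    counts = Counter()
    for path in Path(root).rglob("*"):
        # Skip directories and file types outside the chosen suffixes.
        if not path.is_file() or path.suffix not in suffixes:
            continue
        text = path.read_text(encoding="utf-8", errors="ignore")
        for term, pattern in patterns.items():
            counts[term] += len(pattern.findall(text))
    return counts
```

Calling `count_terms("path/to/checkout")` returns a `Counter` keyed by term. Because this sketch counts every substring occurrence, including those inside longer identifiers, its totals would not necessarily match the results reported by a hosted code search interface.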

Engineers as Algorithm Fiduciaries

To fill the gaps in the top-down approach of current regulatory frameworks, this Commentary proposes that the software engineering community should create and enforce ethical standards for software development. This is because, like the original three learned professions in medieval Europe (doctors, lawyers, and the clergy), software engineers are responsible for a part of their clients’ self.[29] Doctors were responsible for their clients’ physical health; lawyers were supposed to protect their clients from social ills; and priests were shepherds of their clients’ spiritual wellbeing. Because of these responsibilities, each of the three learned professions owes a fiduciary duty to its clients and must take actions to look after their welfare. Similarly, in the digital age, software engineers could assume the stewardship of consumers’ digital self.

Indeed, because “[t]he digital self is constructed solely through online interaction without the intervention of nonverbal feedback and the influence of traditional environmental factors,” it is heavily dependent on the technical choices that software engineers make. Preventing online avatars from showing sexual orientation, for example, would severely constrain one’s digital expressive autonomy.[30] Because these types of engineering decisions directly impact how one’s digital self could manifest in cyberspace, it is logical to expect software engineers to take actions to promote their clients’ digital wellbeing. As with the original three learned professions, this responsibility justifies imposing a fiduciary duty on the software engineering profession.

Imposing a fiduciary duty on software engineers would also provide benefits not available to external regulation. In the medical profession, “the complexity of the knowledge base and skills required, especially as technology advance[s], would make regulation by non-professionals difficult.”[31] It is similarly expensive to train external regulators so that they have the requisite expertise in computer science and statistics to meaningfully interrogate algorithmic results.[32] Additionally, some digital technologies could be used as coercive tools by government, and self-regulation is desirable to maintain the profession’s independence and resist governmental mandates that undermine the rule of law.[33] Thus, while self-regulation alone would not fully address the issues of algorithmic bias, it could represent a significant step towards the ultimate and necessary goal of drafting a regulatory framework built for the new digital age.

Notes

[1] See, e.g., Cathy O’Neil, Weapons of Math Destruction: How Big Data Increases Inequality and Threatens Democracy (2016) (surveys many mathematical models and algorithms that use biased data, such as data on past successful engineering hires, which could favor male engineers because there were more male than female engineers in the past, to show that algorithms do not necessarily produce fair, objective, or unbiased results); Safiya Umoja Noble, Algorithms of Oppression: How Search Engines Reinforce Racism (2018) (discusses how search engine algorithms encode sexist and racist attitudes of their developers, which then produce discriminatory outputs such as the showing of a pornographic website as the first result for the keywords “black girls”); Jenny L. Davis, Apryl Williams, & Michael W. Yang, Algorithmic Reparation, 8 Big Data & Soc’y 1 (2021) (explains that machine learning algorithms reproduce inequality because 1) they can be products of unjust goals rooted in discriminatory and/or illegal priorities; 2) they can be derived from nonrepresentative data; and/or 3) they can use biased proxies).

[2] See, e.g., Rebecca J. Hamilton, Governing the Global Public Square, 62 Harv. Int’l L. J. 117, 117–19 (2021); David Kaye, Speech Police: The Global Struggle to Govern the Internet 47 (2019).

[3] See, e.g., Danielle Keats Citron & Benjamin Wittes, The Internet Will Not Break: Denying Bad Samaritans § 230 Immunity, 86 Fordham L. Rev. 401 (2017) (recommending a revision of § 230 immunity for Internet companies); Genevieve Lakier & Nelson Tebbe, After the “Great Deplatforming”: Reconsidering the Shape of the First Amendment, Law & Political Economy [LPE] Project (Mar. 1, 2021), https://perma.cc/56F3-KMBE (advocating for “a public battle that includes the courts and legislatures and corporations over the meaning and scope of democratic speech on the platforms”); Ted Cruz, Sen. Cruz: We Have An Obligation To Defend The First Amendment Right Of Every American On Social Media Platforms, Senator Ted Cruz (Apr. 12, 2018), https://www.cruz.senate.gov/newsroom/press-releases/sen-cruz-we-have-an-obligation-to-defend-the-first-amendment-right-of-every-american-on-social-media-platforms (advocating for the regulation of Internet companies to ensure free speech); Eugene Volokh, Treating Social Media Platforms Like Common Carriers?, 1 J. Free Speech L. 377 (2021) (advocating for treating certain functionalities of social media platforms as common carriers and regulating them as such); David S. Rubenstein, The Outsourcing of Algorithmic Governance, Y. J. Reg. Blog (Jan. 19, 2021), https://www.yalejreg.com/nc/the-outsourcing-of-algorithmic-governance-by-david-s-rubenstein/ (advocating for AI ethical governance); Jacob Kovacs-Goodman & Alice Shoenauer Sebag, Assessing Online True Threats and Their Impacts: The New Standard of Counterman v. Colorado, 37 Harv. J. L. & Tech. Dig., 2023, https://jolt.law.harvard.edu/digest/assessing-online-true-threats-and-their-impacts (examining the Supreme Court’s new recklessness standard for true threats and suggesting pursuing different levels of ex ante or ex post regulations).

[4] See Kate Klonick, The New Governors: The People, Rules, and Processes Governing Online Speech, 131 Harv. L. Rev. 1598 (2018) (characterizing online platforms as the “new governors”).

[5] Americans with Disabilities Act of 1990, 42 U.S.C. § 12101 et seq. (1990).

[6] Biden v. Knight First Amendment Inst. at Columbia Univ., 141 S. Ct. 1220 (2021).

[7] See EEOC, The Americans with Disabilities Act and the Use of Software, Algorithms, and Artificial Intelligence to Assess Job Applicants and Employees (2022), https://www.eeoc.gov/laws/guidance/americans-disabilities-act-and-use-software-algorithms-and-artificial-intelligence.

[8] Id.

[9] Arielle Silverman et al., Technology and Accommodations: Employment Experiences of U.S. Adults Who Are Blind, Have Low Vision, or Are Deafblind, Am. Found. Blind (2022), https://www.afb.org/sites/default/files/2022-01/AFB_Workplace_Technology_Report_Accessible_FINAL.pdf.

[10] Kent Beck et al., Manifesto for Agile Software Development (2001) (last accessed Sep. 22, 2023), https://agilemanifesto.org/.

[11] See Jory MacKay, Software Development Process: How to Pick The Process That’s Right For You, Planio (last accessed Sep. 23, 2023), https://plan.io/blog/software-development-process/ (discussing many variations of ASD, some with five to seven phases).

[12] See, e.g., Mohamad Kassab, The Changing Landscape of Requirements Engineering Practices Over The Past Decade, 23rd IEEE Int’l Requirements Eng’g Conf. (2015); Darrell Rigby, Jeff Sutherland & Hirotaka Takeuchi, Embracing Agile, Harv. Bus. Rev. (2016).

[13] Irum Inayat et al., A Systematic Literature Review on Agile Requirements Engineering Practices and Challenges, 51 Computs. Hum. Behav. 915 (2015).

[14] See, e.g., Aleksander Jarzębowicz and Paweł Weichbroth, A Qualitative Study on Non-Functional Requirements in Agile Software Development, 9 IEEE Access 40458 (2021); Dan Turk, Robert France, and Bernhard Rumpe, Assumptions Underlying Agile Software-Development Processes, 16 J. Database Mgmt. 62 (2005).

[15] See, e.g., Lina Lagerberg et al., The Impact of Agile Principles and Practices on Large-Scale Software Development Projects, 2013 ACM / IEEE International Symposium on Empirical Software Engineering and Measurement 348 (2013); Xu Bin et al., Extreme Programming in Reducing the Rework of Requirement Change, 3 Canadian Conf. on Electrical & Comput. Eng’g 1567 (2004).

[16] Elizabeth Bjarnason, Krzysztof Wnuk & Björn Regnell, A Case Study on Benefits and Side-Effects of Agile Practices in Large-Scale Requirements Engineering, AREW ‘11: Procs. 1st Workshop on Agile Requirements Eng’g 1 (2011).

[17] Bao Kham Chau, Governing the Algorithmic Turn: Lyft, Uber, and Disparate Impact, Wm. & Mary Ctr. L. & Ct. Tech. (2022).

[18] Google, Google Diversity Annual Report 2023 (2023), https://static.googleusercontent.com/media/about.google/en//belonging/diversity-annual-report/2023/static/pdfs/google_2023_diversity_annual_report.pdf?cachebust=2943cac.

[19] See Chromium Open Source, overview.md – Chromium Code Search (last accessed Sep. 22, 2023), https://source.chromium.org/chromium/chromium/src/+/main:docs/accessibility/overview.md.

[20] Chromium Open Source, Chromium Bugs (last accessed Sep. 22, 2023), https://bugs.chromium.org/p/chromium/issues/detail?id=1312305.

[21] Reader Mode offers a simplified version of the original web page for visually-impaired users.

[22] Chromium Open Source, Reader Mode on Desktop Platforms (last accessed Sep. 22, 2023), link.

[23] Chromium Open Source, Crash in Accessibility (last accessed Sep. 22, 2023), https://bugs.chromium.org/p/chromium/issues/detail?id=1312305.

[24] Id.

[25] Google, Google Developer Documentation Style Guide (last accessed Sep. 22, 2023), https://developers.google.com/style/inclusive-documentation.

[26] Id.

[27] See Chromium Open Source, Chromium Source Code Search (last accessed Sep. 22, 2023), https://source.chromium.org/search?q=sanity&ss=chromium%2Fchromium%2Fsrc. Note, it is not possible to obtain an exact count via this interface because the number constantly changes.

[28] See Jack Balkin, The Fiduciary Model of Privacy, 134 Harv. L. Rev. F. 11 (2020).

[29] Elliot Freidson, Professional Powers: A Study of The Institutionalization of Formal Knowledge (1986).

[30] Shanyang Zhao, The Digital Self: Through the Looking Glass of Telecopresent Others, 28 Symbolic Interaction 387 (2005).

[31] Sylvia R. Cruess and Richard L. Cruess, The Medical Profession and Self-Regulation: A Current Challenge, 7 Ethics J. Am. Med. Assoc. 1 (2005).

[32] See, e.g., Sean Burch, “Senator, We Run Ads”: Hatch Mocked for Basic Facebook Question to Zuckerberg, Wrap (last accessed Sep. 22, 2023), https://www.thewrap.com/senator-orrin-hatch-facebook-biz-model-zuckerberg/.

[33] See, e.g., Lev Grossman, Inside Apple CEO Tim Cook’s Fight With the FBI, Time (last accessed Sep. 22, 2023), https://time.com/4262480/tim-cook-apple-fbi-2/; Maya Wang, China’s Techno-Authoritarianism Has Gone Global: Washington Needs to Offer an Alternative, Hum. Rights Watch (Apr. 8, 2021), https://www.hrw.org/news/2021/04/08/chinas-techno-authoritarianism-has-gone-global.