AI as the New Front Door to Legal Services: What Google’s AI Overview Means for Law Firm Visibility and Professional Responsibility

By Dean (Dejan) Cook - Edited by Shriya Srikanth

Dean (Dejan) Cook is the founder of Legal Edge (www.legaledge.marketing), where he advises law firms on ethical, data-informed approaches to digital visibility and client communication. His recent work focuses on how AI-mediated research affects access to legal services and public understanding of the legal profession.

I. Introduction

For many potential clients, Google is the first and often the only gateway to legal information. With the rollout of Google’s AI Overview feature, search is shifting from a list-of-links model to an answer-first model. AI-generated summaries now appear at the top of the results page, frequently before any individual law firm website is shown.

When someone searches “Do I need a lawyer for a first DUI in Texas?” or “How do I file for divorce in Michigan?”, the AI Overview may provide a synthesized explanation immediately. In many cases, that explanation may be all that the user reads.

This shift has significant implications for how clients discover and evaluate lawyers. It also affects how attorneys satisfy their obligations under professional responsibility rules governing advertising, client communications, and the avoidance of misleading statements. When AI systems act as intermediaries between lawyers and the public, decisions about how legal information is selected, summarized, and presented become matters of legal ethics and public policy, not merely marketing strategy.

This Commentary draws on a review of fifty U.S. law firm websites to examine how Google’s AI Overview currently uses, or ignores, firm-authored legal content. It argues that AI-mediated visibility should be treated as an emerging professional responsibility issue for law firms, regulators, and legal educators.

II. How AI Overview Mediates Legal Information

Google’s AI Overview generates a short narrative response to a user’s query and cites a small number of web pages as underlying sources. In practice, these summaries often function as the endpoint of a search session rather than a gateway to further reading.

Empirical research on “zero-click” search behavior suggests that most Google searches now end without a click to a traditional result. [1] Estimates vary by query type, but informational searches are particularly likely to conclude once users believe they have received a sufficient answer on the results page itself. AI Overview intensifies this trend by offering longer and more coherent responses than traditional featured snippets. [2]

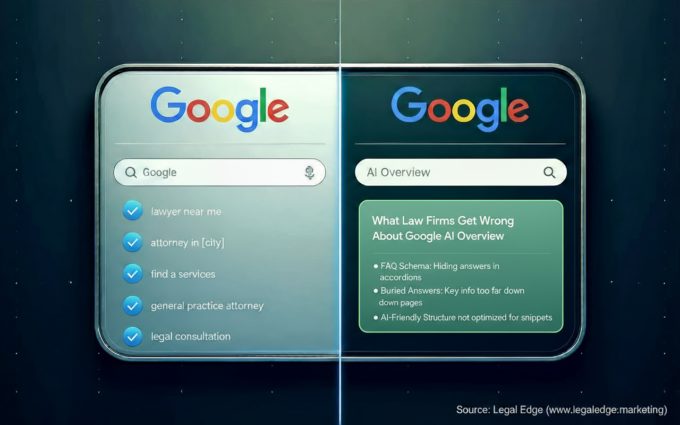

When AI Overview describes legal concepts, it necessarily selects among competing sources. The system must decide which pages to draw from, which passages to quote or paraphrase, and which sites to list as references. In many of the searches reviewed for this Commentary, law firms had the correct legal answer on their websites and often provided more detail than other sources. Despite that, AI Overview did not always rely on those pages. Instead, it frequently cited websites that explained the same legal rule in a shorter and more direct way, or that presented the information in a format that made the answer easy to identify. This suggests that accuracy alone does not determine which sources are surfaced. How clearly legal information is written and how simply it is organized can play a decisive role in whether AI-generated search results use it.

From the perspective of a prospective client, this distinction is largely invisible. The answer appears under Google’s branding, with limited source attribution. From the perspective of a law firm, however, inclusion or exclusion at this stage determines whether the firm’s expertise is visible at all.

III. A Study of Fifty Law Firm Websites

To better understand how law firm content interacts with AI Overview, we reviewed fifty law firm websites across a range of practice areas, firm sizes, and geographic markets. For each firm, we identified pages likely to answer common client questions, such as “How do I file for divorce in this state?” or “What are the penalties for a first-offense DUI?” We then conducted mobile Google searches with AI Overview enabled to see whether those pages were cited and, if not, what types of sources were selected instead.

The purpose of this review was not to measure ranking changes with econometric precision. Rather, it was to identify recurring patterns that affect the quality of legal information available to the public. Several consistent themes emerged.

First, many pages buried the core answer to a legal question beneath lengthy introductions or marketing-oriented language. AI Overview tended to favor pages that stated the relevant rule or process clearly and concisely near the top. Pages that opened with one or two direct sentences answering the question were cited more often than pages that began with general commentary about stress, uncertainty, or the value of hiring a lawyer.

Second, relatively few firms structured their content in ways that made questions and answers easy for machines to identify. Only a minority used FAQ-style headings, concise summaries, or structured data such as FAQPage schema. In contrast, pages cited by AI Overview often included explicit questions followed immediately by brief and self-contained answers.
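As an illustration of the structured data mentioned above, the following minimal sketch builds a schema.org FAQPage block in JSON-LD from question-and-answer pairs. The function name build_faq_jsonld and the bracketed answer text are hypothetical placeholders, not content from any reviewed site, and nothing here implies that adding such markup guarantees citation by AI Overview.

```python
import json


def build_faq_jsonld(pairs):
    """Return a schema.org FAQPage JSON-LD string for (question, answer) pairs.

    `pairs` is an iterable of (question, answer) strings. The helper name and
    its signature are illustrative assumptions, not part of any library.
    """
    document = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(document, indent=2)


# Placeholder content only: the answer text must come from an attorney-reviewed page.
faq_json = build_faq_jsonld(
    [
        (
            "How do I file for divorce in this state?",
            "[One or two direct sentences stating the rule, reviewed by an attorney.]",
        )
    ]
)

# JSON-LD is typically embedded in the page inside a script element like this.
print('<script type="application/ld+json">\n' + faq_json + "\n</script>")
```

The markup should mirror questions and answers that are already visible on the page; the sketch only shows the shape of the data, with each question paired to one brief, self-contained answer.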

Third, many firms failed to clearly signal authorship, expertise, or accountability. A substantial portion of blogs and guides appeared without attorney bylines, review statements, or citations to statutes and official sources. For a topic that falls squarely within Google’s “Your Money or Your Life” category, this absence of attribution is particularly consequential.

“Your Money or Your Life,” often abbreviated YMYL, is Google’s term for categories of content that can materially affect a user’s financial stability, legal rights, health, or personal safety. [3] The concept originates in Google’s Search Quality Rater Guidelines, which are used to train and evaluate the systems that assess search result quality. Legal information is explicitly treated as YMYL content because inaccuracies can cause users to lose rights, miss deadlines, or misunderstand legal obligations. As a result, Google’s guidelines place heightened emphasis on demonstrable expertise, clear authorship, and trustworthiness for legal topics. [4] Pages that lack these signals may be treated as lower quality even when the underlying information is accurate.

Fourth, local landing pages were frequently thin and generic. Many consisted of little more than a city name and a prompt to contact the firm. These pages rarely appeared as AI Overview sources. Pages that included jurisdiction-specific details, such as local court procedures or statutory variations, were more likely to be surfaced.

Finally, some sites suffered from poor readability and technical performance on mobile devices. Long blocks of dense legal prose, intrusive pop-ups, and slow loading times made content difficult for human readers to navigate.

Pop-ups, delayed loading, and similar design features can interfere with AI systems for many of the same reasons they frustrate human readers. AI-generated summaries depend on identifying the main body of a page and isolating short passages that clearly answer a user’s question. When content loads slowly, the primary text may not be immediately visible or may appear only after scripts and interactive elements run. In those cases, automated systems may fail to capture the full answer or may rely on partial or out-of-context text.

When legal content is visually or structurally obscured by consent banners, subscription prompts, or chat widgets, it becomes harder for automated systems to distinguish core informational content from surrounding interface elements. This increases the likelihood that no single, clean passage will be identified as a reliable answer. As a result, AI systems may favor other sources where the relevant information appears quickly, plainly, and without obstruction, even if the underlying legal analysis is less thorough.
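One rough way to test for the problem of text that appears only after scripts run is to check whether a page’s key answer is present in the HTML as delivered, before any script executes. The sketch below uses only Python’s standard library; the names VisibleText, static_text, and answer_offset are hypothetical, and the approach is a simplifying assumption rather than a description of how Google or any AI system actually processes pages.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collect text from raw HTML, ignoring script, style, and similar elements."""

    SKIP = {"script", "style", "noscript", "template"}

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep only text outside skipped elements, with whitespace normalized.
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(" ".join(data.split()))


def static_text(html):
    """Return the text available in the HTML without running any scripts."""
    parser = VisibleText()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


def answer_offset(html, answer):
    """Return the character offset of `answer` in the static text, or -1 if absent."""
    return static_text(html).find(" ".join(answer.split()))


# Hypothetical pages: one serves the answer in the HTML, one injects it by script.
served = "<html><body><h1>Q?</h1><p>The answer is here.</p></body></html>"
injected = "<html><body><div id='app'></div><script>render('The answer is here.')</script></body></html>"

print(answer_offset(served, "The answer is here."))
print(answer_offset(injected, "The answer is here."))
```

A result of -1 indicates that the passage is not in the delivered markup at all. A large offset suggests that the answer sits far below banners, navigation, or introductory text, which is the pattern the review associated with pages that were cited less often.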

Taken together, these patterns suggest that many law firm websites are not well adapted to an AI-mediated search environment. This has consequences not only for firm visibility, but also for the quality of legal information presented to the public.

IV. Professional Responsibility and Policy Implications

At first glance, these findings might appear to raise only marketing concerns. In fact, they implicate core principles of professional responsibility.

Rules governing lawyer advertising and communications generally prohibit false or misleading statements and require that information about a lawyer’s services not create unjustified expectations. [5] Historically, these rules focused on what lawyers themselves said in advertisements, brochures, or website copy. AI Overview complicates this framework. When search engines synthesize and paraphrase legal content, lawyers’ words may reach the public through an algorithmic intermediary they do not control.

The implications of this form of algorithmic intermediation are significant, even if formal disciplinary exposure remains limited under existing rules. Law firms are unlikely to be held responsible for every way a search engine paraphrases publicly available content, because existing professional responsibility rules were drafted with direct lawyer communications in mind.

However, other risks are more immediate. AI-generated summaries may omit important conditions, exceptions, or jurisdictional limits while presenting the remaining information in a confident and authoritative tone. Users may reasonably assume that such summaries reflect a vetted legal consensus, particularly when the underlying sources include law firm websites.

There is also reputational risk. Even when a firm does not control the final wording, users may associate the summarized answer with the firm’s expertise or perceived advice. This can create confusion about the scope of the firm’s role and the reliability of the information provided.

Algorithmic intermediation further complicates the boundary between general legal information and legal advice. AI-generated responses may feel personalized when users ask conversational questions, which can prompt reliance that resembles reliance on legal advice even when no attorney-client relationship exists.

Finally, there is a longer-term regulatory risk. As AI-generated search results become a primary means by which the public encounters legal information, regulators may revisit advertising and communication rules to address gaps in accountability.

There is also a distributive dimension. Firms that invest in clear, well-structured, and well-attributed content are more likely to be surfaced by AI Overview and thus shape the information environment for communities. Smaller or under-resourced firms may become effectively invisible online, even if they provide high-quality legal services offline.

Although many users do not click through to linked websites, inclusion in AI Overview still shapes which firms and perspectives users encounter. Even without a click, firms cited in AI-generated summaries benefit from visibility, perceived authority, and name recognition. Users may remember a firm’s name or return to it later when they decide to seek representation.

In this sense, AI Overview functions less as a referral mechanism and more as a gatekeeper of professional legitimacy. The access-to-justice concern is not limited to lost website traffic; it extends to the risk that AI-mediated summaries disproportionately amplify the voices of larger or more digitally sophisticated firms, narrowing the range of legal perspectives shaping public understanding. [6]